In case you have not noticed yet, Dynamics 365 9.0 ships features that let developers build really powerful custom components that work across all D365-supported devices. Unified Interface, as its name suggests, works pretty much the same on every device, which means we are no longer held back by the “metadata rebuilding in progress” nightmare (people who used the old mobile app know what I mean). Personally, I believe the Custom Control Framework will be what really makes D365 shine as a modern technology, but so far we don’t know when it will be available (hopefully we will learn more about it at Extreme 365, so see you there!).

While waiting for that big thing, I played a little with the new mobile app. It is now super easy on mobile to add a picture, video or recorded audio as an attachment to a record. While adding a note you will see icons representing each of these:

Clicking the camera icon will of course open your camera app and attach the resulting picture to the annotation, the microphone icon will open a recorder, and so on.

I recently had a question from a customer: they were creating sound notes all the time, and one person was dedicated to retyping them as text in CRM. Can we simplify that? Using some fancy AI technology, we can!

If we create a sound note on our mobile device, it will be attached as a .wav file. So we can use some kind of speech recognition system to convert this audio file into text. My first choice was Azure Cognitive Services and Bing Speech API:

https://azure.microsoft.com/en-us/services/cognitive-services/speech/

It has a 30-day free trial and a REST API that can convert audio files up to 15 seconds long (only .wav files, unfortunately). Although I’m a fan of Microsoft technology, to be honest the Google Speech API looks much more mature:

https://cloud.google.com/speech/

A really large number of supported languages, no constraints on audio file length and many more supported formats look really impressive and set the bar high for Microsoft. There are also many other speech recognition systems, so simply choose what’s best for you. I’ve chosen Azure Cognitive Services for the sake of this PoC.
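Because the REST endpoint only accepts short clips, it may be worth checking the length of a recording before sending it. The snippet below is only a rough sketch of how that could be done by reading the RIFF/WAVE header. `WavInfo` and `GetDurationSeconds` are names I made up for illustration, and the code assumes an uncompressed PCM file and a little-endian host, which I have not verified for every recorder app.

```csharp
using System;
using System.Text;

public static class WavInfo
{
    // Returns the clip length in seconds, or null when the bytes do not look
    // like a RIFF/WAVE file with a usable "fmt " and "data" chunk.
    // Assumption: little-endian host, so BitConverter matches the WAV byte order.
    public static double? GetDurationSeconds(byte[] wav)
    {
        if (wav == null || wav.Length < 12) return null;
        if (Encoding.ASCII.GetString(wav, 0, 4) != "RIFF") return null;
        if (Encoding.ASCII.GetString(wav, 8, 4) != "WAVE") return null;

        int byteRate = 0;
        int pos = 12; // first chunk starts right after "RIFF<size>WAVE"
        while (pos + 8 <= wav.Length)
        {
            string chunkId = Encoding.ASCII.GetString(wav, pos, 4);
            int chunkSize = BitConverter.ToInt32(wav, pos + 4);
            if (chunkSize < 0) return null; // unknown or streaming length

            if (chunkId == "fmt " && pos + 20 <= wav.Length)
            {
                // Average bytes per second sits 8 bytes into the fmt chunk data.
                byteRate = BitConverter.ToInt32(wav, pos + 16);
            }
            else if (chunkId == "data")
            {
                return byteRate > 0 ? (double)chunkSize / byteRate : (double?)null;
            }

            // Chunks are padded to an even number of bytes.
            long next = (long)pos + 8 + chunkSize + (chunkSize % 2);
            if (next > wav.Length) return null;
            pos = (int)next;
        }
        return null;
    }
}
```

A plugin could call `WavInfo.GetDurationSeconds(bytes)` on the decoded attachment and skip the service call when the result is null or greater than 15.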

Having said all that, now we only have to write a plugin that calls our service and converts the audio file when an annotation is created:

```csharp
using System;
using System.IO;
using System.Linq;
using System.Net;
using Microsoft.Xrm.Sdk;

public class ConvertSpeechToTextPlugin : IPlugin
{
    public void Execute(IServiceProvider serviceProvider)
    {
        var context = (IPluginExecutionContext)serviceProvider.GetService(typeof(IPluginExecutionContext));
        var tracingService = (ITracingService)serviceProvider.GetService(typeof(ITracingService));
        var organizationServiceFactory = (IOrganizationServiceFactory)serviceProvider.GetService(typeof(IOrganizationServiceFactory));
        // Passing null creates the service in the SYSTEM user context.
        var orgService = organizationServiceFactory.CreateOrganizationService(null);

        tracingService.Trace("Plugin started");
        var target = context.InputParameters["Target"] as Entity;

        // Only annotations that carry an attachment are of interest.
        if (target == null || target.LogicalName != "annotation" || !(target.GetAttributeValue<bool?>("isdocument") ?? false))
        {
            return;
        }

        tracingService.Trace("Processing annotation");
        var mimetype = target.GetAttributeValue<string>("mimetype");
        tracingService.Trace("Mimetype: " + mimetype);
        if (mimetype != "audio/wav")
        {
            return;
        }

        tracingService.Trace("Getting document body");
        var documentBody = target.GetAttributeValue<string>("documentbody");
        tracingService.Trace("Convert body to bytes");
        var byteContent = Convert.FromBase64String(documentBody);

        tracingService.Trace("Create request");
        var request = (HttpWebRequest)WebRequest.Create("https://speech.platform.bing.com/speech/recognition/interactive/cognitiveservices/v1?language=en-us&format=detailed");
        request.SendChunked = true;
        request.Accept = @"application/json;text/xml";
        request.Method = "POST";
        request.ProtocolVersion = HttpVersion.Version11;
        request.ContentType = @"audio/wav; codec=audio/pcm; samplerate=16000";
        request.Headers["Ocp-Apim-Subscription-Key"] = "subscription_key_here";

        // Send the audio and close the request stream before reading the response.
        using (Stream requestStream = request.GetRequestStream())
        {
            requestStream.Write(byteContent, 0, byteContent.Length);
        }

        string response;
        using (var webResponse = request.GetResponse())
        using (var reader = new StreamReader(webResponse.GetResponseStream()))
        {
            tracingService.Trace("Read response");
            response = reader.ReadToEnd();
        }

        var result = response.FromJSON<ServiceResponse>();
        if (result.RecognitionStatus == "Success")
        {
            tracingService.Trace("Recognition successful");
            // Take the candidate the service is most confident about.
            var bestConfidence = result.NBest.OrderByDescending(n => n.Confidence).FirstOrDefault();

            var toUpdate = new Entity("annotation");
            toUpdate.Id = target.Id;
            toUpdate["notetext"] = bestConfidence?.Lexical;
            orgService.Update(toUpdate);
        }
    }
}
```

The ServiceResponse class looks like this:

```csharp
public class ServiceResponse
{
    public string RecognitionStatus { get; set; }
    public int Offset { get; set; }
    public int Duration { get; set; }
    public Nbest[] NBest { get; set; }
}

public class Nbest
{
    public float Confidence { get; set; }
    public string Lexical { get; set; }
    public string ITN { get; set; }
    public string MaskedITN { get; set; }
    public string Display { get; set; }
}
```

FromJSON is just an extension method that uses the JSON.NET library to deserialize a JSON string into my object. (No, I’m not merging JSON.NET using ILMerge or any other merging technique, which I try to avoid in most cases. I always include the full source code of this great library in my plugins; recently almost every project I work on requires some kind of serialization/deserialization inside plugins, mostly for HTTP API calls.)
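The extension method itself is not part of the listings above, so here is a minimal sketch of what such a helper could look like. It assumes the `Newtonsoft.Json` namespace from JSON.NET is available to the plugin; the class name `JsonExtensions` is only a placeholder.

```csharp
using Newtonsoft.Json;

public static class JsonExtensions
{
    // Deserializes a JSON string into an instance of T using JSON.NET.
    public static T FromJSON<T>(this string json)
    {
        return JsonConvert.DeserializeObject<T>(json);
    }
}
```

JSON.NET matches property names case-insensitively by default, so a class with properties such as `RecognitionStatus` and `NBest` should not need extra attributes.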

This plugin is registered on the Create message of Annotation. We simply check whether the annotation contains an attachment, and if the attachment type is “audio/wav” we call the Bing Speech API to convert the audio file to text. The response looks like this:

Believe it or not, I actually said “Hello world” when I recorded the sound note. So basically the result says it is 92.5% sure that I said “Hello world” and 79% sure that I said “Hello”. There can be more matches, of course; how we handle them is a different story. We could prepare some sort of machine learning algorithm, maybe use something that’s already available on Azure, or maybe some neural network, whatever you like. I’m simply taking the match with the highest Confidence value. I guess in many cases it will be wrong, but for a PoC it’s good enough for me.
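One cheap step up from “always take the top match” would be to ignore results the service itself is not very sure about. The sketch below illustrates that idea only. `Candidate` is a made-up stand-in for one recognition match, `BestMatch.Pick` is a hypothetical helper, and the threshold is an arbitrary value you would have to tune yourself.

```csharp
using System.Linq;

// Hypothetical stand-in for a single recognition match.
public class Candidate
{
    public float Confidence { get; set; }
    public string Lexical { get; set; }
}

public static class BestMatch
{
    // Returns the most confident candidate, or null when the list is missing,
    // empty, or no candidate reaches minConfidence.
    public static Candidate Pick(Candidate[] candidates, float minConfidence)
    {
        if (candidates == null) return null;
        return candidates
            .Where(c => c.Confidence >= minConfidence)
            .OrderByDescending(c => c.Confidence)
            .FirstOrDefault();
    }
}
```

With the two matches described above and a threshold of 0.8, `Pick` would return the “hello world” candidate; with a stricter threshold such as 0.95 it would return null, and the note text could simply be left untouched.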

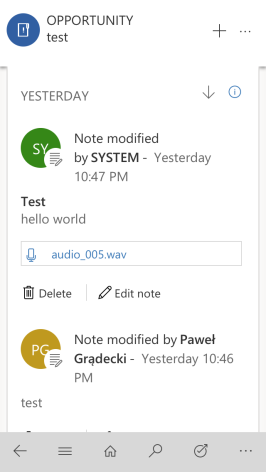

And as a result we get our note with audio file and text in the note text:

It works 🙂 Happy coding!

Nice! I got it working on my instance as well. Although I had a bit trouble in deserializing JSON it finally works. Thanks for sharing this! Cheers.
